Navigating the Timeline of the EU Artificial Intelligence Act

Key Dates and Implications

The European Union reached a significant milestone in regulating artificial intelligence on December 8, 2023, when the Parliament, Commission, and Council reached a political agreement on the EU Artificial Intelligence Act (AI Act). This agreement, later approved unanimously by the EU member states, paves the way for the world's first comprehensive AI regulation. The AI Act's staged implementation timeline aims to ensure effective AI governance while encouraging innovation and public trust.

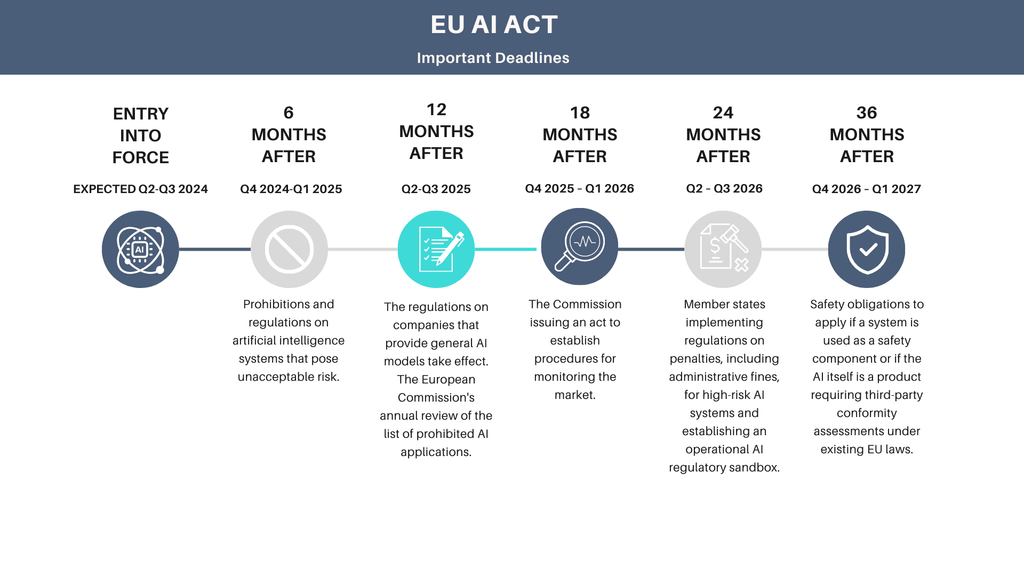

Important Dates & Next Steps:

- Entry into Force (expected Q2-Q3 2024): The AI Act will become effective 20 days after its publication in the Official Journal of the EU.

- Entry into Application: The Act generally applies 24 months after entry into force, with some provisions applying earlier and others later.

- Six Months After Entry into Force (Q4 2024-Q1 2025): Prohibitions on unacceptable-risk AI systems take effect.

- Twelve Months After Entry into Force (Q2-Q3 2025): Obligations for providers of general-purpose AI (GPAI) models begin. Member states must also have designated their national competent authorities, and the Commission begins its annual review of the list of prohibited AI practices.

- Eighteen Months After Entry into Force (Q4 2025-Q1 2026): The Commission adopts an implementing act on post-market monitoring.

- Twenty-Four Months After Entry into Force (Q2-Q3 2026): Obligations for high-risk AI systems listed in Annex III, penalties, and the establishment of AI regulatory sandboxes by member states are expected to take effect.

- Thirty-Six Months After Entry into Force (Q2-Q3 2027): Obligations for high-risk AI systems not listed in Annex III take effect.

- By the End of 2030: Obligations take effect for certain AI systems, such as those that are components of large-scale IT systems established by EU law.

The AI Act defines four levels of risk in AI: unacceptable risk, high risk, limited risk, and minimal risk. Each level carries different obligations, up to outright prohibition. AI systems that pose an "unacceptable risk", such as those that use biometric data to infer sensitive traits, will be banned. High-risk applications, for example in employment, law enforcement, or critical infrastructure, will be subject to strict obligations before they can be put on the market. For instance, developers must demonstrate that their systems are safe, transparent, and explainable to users, that they comply with privacy laws, and that they do not discriminate. For limited-risk AI tools such as chatbots, users must be informed that they are interacting with an AI system.

The AI Act applies to AI systems and models operating in the EU, and any company that violates its rules risks substantial fines. As the AI Act moves through its implementation phases, stakeholders will closely observe its impact on AI development, innovation, and societal trust. Through an ongoing dialogue between regulators and stakeholders, the EU aims to establish a framework that ensures the responsible and ethical use of AI while driving technological advancement and protecting fundamental rights.

The European Commission plans to establish an AI Office, advised by independent experts, to oversee general-purpose AI models and support enforcement. This office will develop methods to evaluate the capabilities of these models and track the associated risks.

In conclusion, the European Union's Artificial Intelligence Act marks a pioneering step towards a comprehensive regulatory framework for AI technologies. With a political agreement reached on December 8, 2023, and a structured timeline for phased implementation, the AI Act is poised to set a global precedent for AI governance. By setting clear obligations for AI systems based on their risk level and creating an AI Office for enforcement and oversight, the EU signals its commitment to leading the way in responsible AI use. As this legislative roadmap unfolds, its impact on the global AI landscape, technological advancement, and society is likely to be significant and far-reaching, signaling the dawn of a new era in ethical AI governance.